History of computing hardware

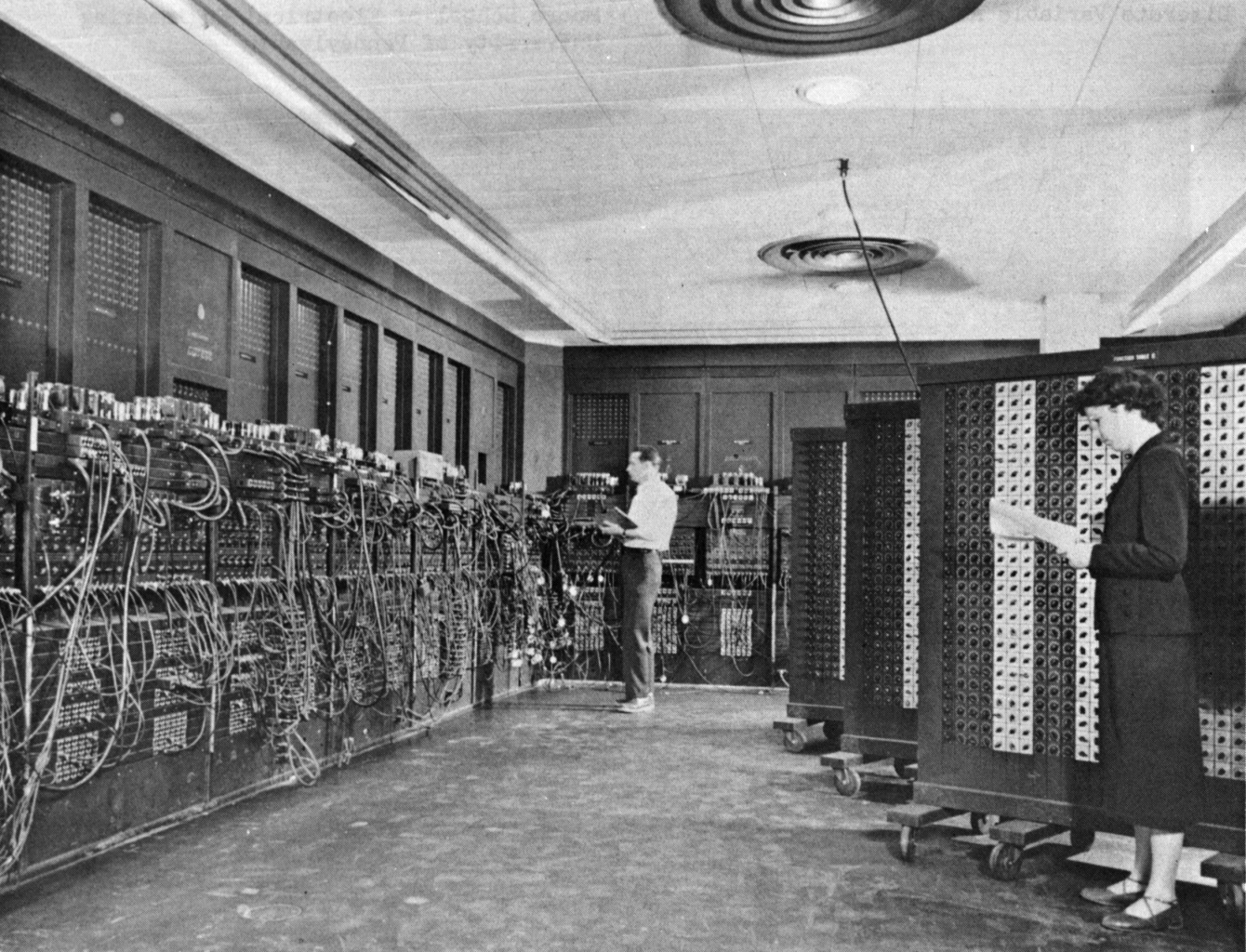

The history of computing hardware covers the development from early, simple calculating aids to modern-day computers.

The first aids to computation were purely mechanical devices that required the operator to set up the initial values of an elementary arithmetic operation, then manipulate the device to obtain the result. Later, analog computers represented numbers in a continuous form (e.g. distance along a scale, rotation of a shaft, or a voltage). Numbers could also be represented in the form of digits, automatically manipulated by a mechanism. Although this digital approach generally required more complex mechanisms, it greatly increased the precision of results. The development of transistor technology and then the integrated circuit chip led to a series of breakthroughs, starting with transistor computers and then integrated circuit computers, causing digital computers to largely replace analog computers. Metal-oxide-semiconductor (MOS) large-scale integration (LSI) then enabled semiconductor memory and the microprocessor, leading to another key breakthrough, the miniaturized personal computer (PC), in the 1970s. The cost of computers gradually fell so low that personal computers became ubiquitous by the 1990s, followed by mobile computers (smartphones and tablets) in the 2000s.

Epilogue

An indication of how rapidly this field developed can be inferred from the history of the seminal 1947 article by Burks, Goldstine and von Neumann.[194] By the time anyone had time to write anything down, it was obsolete. After 1945, others read John von Neumann's First Draft of a Report on the EDVAC and immediately started implementing their own systems. The rapid pace of development has continued worldwide to this day.[q][r]