Group delay and phase delay

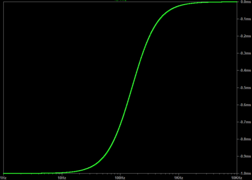

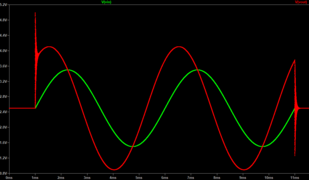

In signal processing, group delay and phase delay are two related ways of describing how a signal's frequency components are delayed in time when passing through a linear time-invariant (LTI) system (such as a microphone, coaxial cable, amplifier, loudspeaker, telecommunications system, Ethernet cable, digital filter, or analog filter). Phase delay describes the time shift of a sinusoidal component (a sine wave in steady state). Group delay describes the time shift of the envelope of a wave packet: a "pack" or "group" of oscillations centered around one frequency that travel together, formed for instance by multiplying a sine wave by an envelope such as a tapering function (amplitude modulation).
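As a rough illustration, the two delays can be computed from a system's phase response φ(ω) using the standard definitions: phase delay is −φ(ω)/ω and group delay is −dφ/dω. The sketch below assumes a first-order RC low-pass filter as the LTI system. The filter, the function names, and the component values are illustrative choices, not taken from the text above.

```python
import cmath
import math


def rc_lowpass(omega, rc):
    """Frequency response H(jw) = 1 / (1 + j*w*RC) of a first-order RC low-pass."""
    return 1.0 / (1.0 + 1j * omega * rc)


def phase_delay(h, omega):
    """Phase delay in seconds: -phi(w) / w, for a response function h(w)."""
    return -cmath.phase(h(omega)) / omega


def group_delay(h, omega, rel_step=1e-4):
    """Group delay in seconds: -d(phi)/dw, estimated by a central difference.

    Assumes the phase does not wrap around +/-pi near omega, which holds
    for the RC low-pass (its phase stays between -pi/2 and 0).
    """
    d = omega * rel_step
    phi_hi = cmath.phase(h(omega + d))
    phi_lo = cmath.phase(h(omega - d))
    return -(phi_hi - phi_lo) / (2.0 * d)


# Hypothetical example values: RC = 1 ms, evaluated at w = 1/RC = 1000 rad/s.
RC = 1e-3
OMEGA = 1000.0


def h(w):
    return rc_lowpass(w, RC)


tau_phase = phase_delay(h, OMEGA)  # closed form: atan(w*RC) / w
tau_group = group_delay(h, OMEGA)  # closed form: RC / (1 + (w*RC)**2)
```

At this frequency the two delays differ (about 0.785 ms for phase delay against 0.5 ms for group delay), which shows that they are distinct quantities for the same system.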

These delays are usually frequency dependent,[1] which means that different frequency components experience different delays. As a result, the signal's waveform experiences distortion as it passes through the system. This distortion can cause problems such as poor fidelity in analog video and analog audio, or a high bit-error rate in a digital bit stream. However, for the ideal case of a constant group delay across the entire frequency range of a bandlimited signal and flat frequency response, the waveform will experience no distortion.
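The effect of frequency-dependent delay on a waveform can be sketched with a two-tone signal. The example below delays each sinusoidal component separately and measures how far the result departs from a simply shifted copy of the input. The tone frequencies, amplitudes, and delay values are hypothetical. The sketch applies a per-component time shift, so it corresponds to the case where the phase is linear through the origin and phase delay and group delay coincide.

```python
import math


def two_tone(t, f1=100.0, f2=300.0):
    """Input waveform: a 100 Hz tone plus a weaker 300 Hz tone."""
    return math.sin(2 * math.pi * f1 * t) + 0.5 * math.sin(2 * math.pi * f2 * t)


def delayed_two_tone(t, tau1, tau2, f1=100.0, f2=300.0):
    """Output when the f1 component is delayed by tau1 and the f2 component by tau2."""
    return (math.sin(2 * math.pi * f1 * (t - tau1))
            + 0.5 * math.sin(2 * math.pi * f2 * (t - tau2)))


def max_shape_error(tau1, tau2, n=1000, duration=0.02):
    """Largest gap between the output and the input shifted by tau1."""
    worst = 0.0
    for k in range(n):
        t = k * duration / n
        err = abs(delayed_two_tone(t, tau1, tau2) - two_tone(t - tau1))
        worst = max(worst, err)
    return worst


# Same delay for both components: the output is a shifted copy of the input.
error_equal = max_shape_error(1e-3, 1e-3)

# Different delays per component: the waveform shape changes.
error_unequal = max_shape_error(1e-3, 2e-3)
```

With equal delays the error is zero up to rounding, while the unequal case departs from the shifted input by a large fraction of the signal amplitude.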

True time delay

A transmitting apparatus is said to have true time delay (TTD) if the time delay is independent of the frequency of the electrical signal.[25][26] TTD allows for a wide instantaneous signal bandwidth with virtually no signal distortion such as pulse broadening during pulsed operation.

TTD is an important characteristic of lossless and low-loss, dispersion-free transmission lines. The telegrapher's equations for lossless transmission show that signals propagate through such lines at a speed of $1/\sqrt{LC}$, where L and C are the distributed inductance and capacitance per unit length. Hence, any signal's propagation delay through the line simply equals the length of the line divided by this speed.
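A minimal numeric sketch of this relationship follows, assuming the standard lossless-line result that the propagation speed is 1/√(LC), with L and C given per unit length. The per-metre values are illustrative figures roughly typical of a 50 Ω coaxial cable, not values from the text, and the function names are hypothetical.

```python
import math


def propagation_speed(l_per_m, c_per_m):
    """Speed in m/s on a lossless line: 1 / sqrt(L * C), with L and C per metre."""
    return 1.0 / math.sqrt(l_per_m * c_per_m)


def propagation_delay(length_m, l_per_m, c_per_m):
    """Delay in seconds: line length divided by the propagation speed."""
    return length_m / propagation_speed(l_per_m, c_per_m)


# Assumed example values: 250 nH/m and 100 pF/m.
L_PER_M = 250e-9
C_PER_M = 100e-12

speed = propagation_speed(L_PER_M, C_PER_M)        # about 2e8 m/s
delay = propagation_delay(10.0, L_PER_M, C_PER_M)  # about 50 ns for a 10 m line
```

Because neither the speed nor the delay depends on frequency, every frequency component of a signal arrives with the same time shift, which is the defining property of true time delay.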