Regulation of artificial intelligence

The regulation of artificial intelligence is the development of public sector policies and laws for promoting and regulating artificial intelligence (AI). It is part of the broader regulation of algorithms.[1][2] The regulatory and policy landscape for AI is an emerging issue in jurisdictions worldwide, including for international organizations without direct enforcement power, such as the IEEE or the OECD.[3]

Since 2016, numerous AI ethics guidelines have been published in order to maintain social control over the technology.[4] Regulation is deemed necessary to both foster AI innovation and manage associated risks.

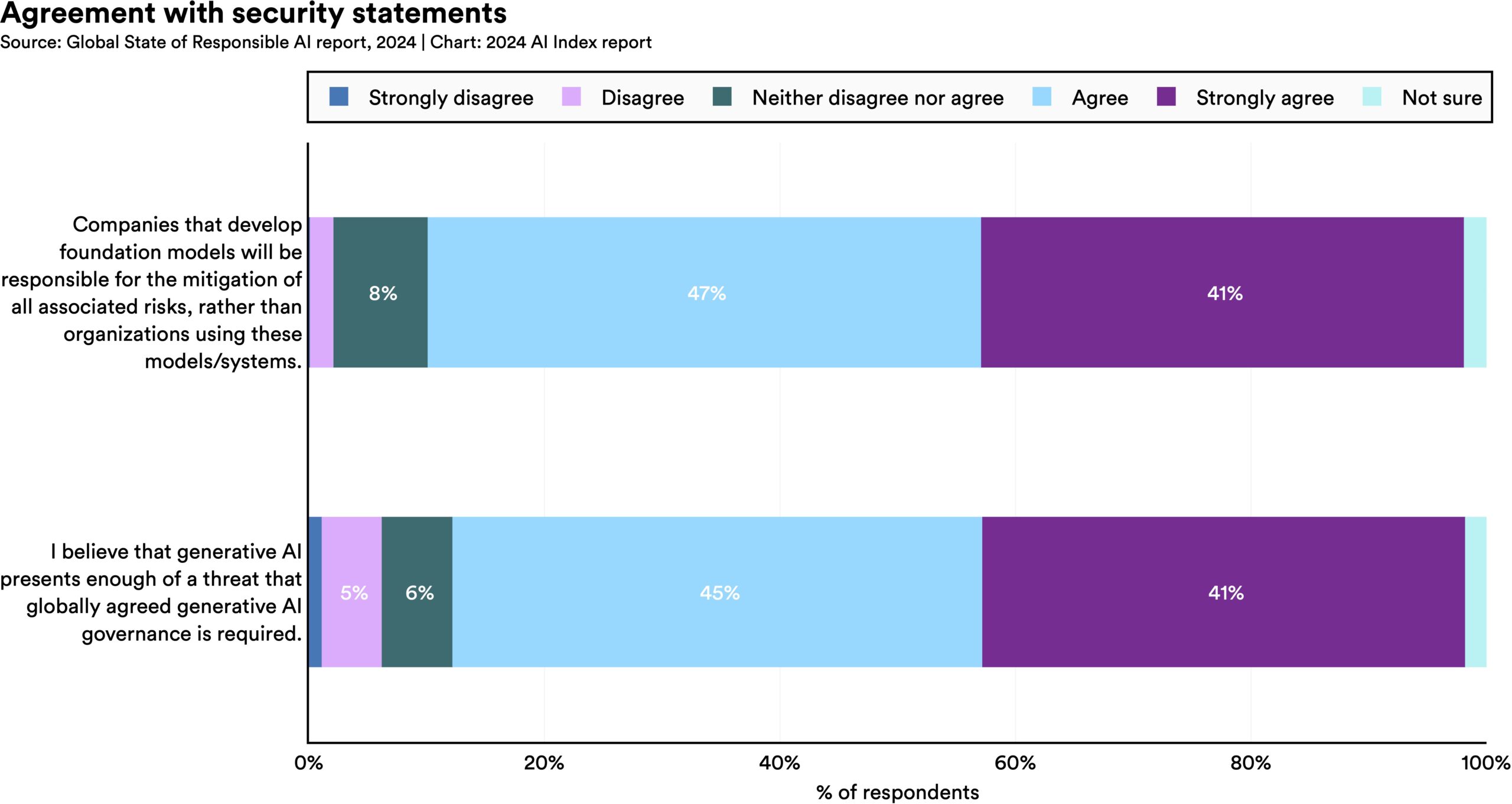

Furthermore, organizations deploying AI have a central role to play in creating and implementing trustworthy AI, adhering to established principles, and taking accountability for mitigating risks.[5]

Regulating AI through mechanisms such as review boards can also be seen as a social means of approaching the AI control problem.[6][7]

Background

According to Stanford University's 2023 AI Index, the annual number of bills mentioning "artificial intelligence" passed in 127 surveyed countries jumped from one in 2016 to 37 in 2022.[8][9]

In 2017, Elon Musk called for regulation of AI development.[10] According to NPR, the Tesla CEO was "clearly not thrilled" to be advocating for government scrutiny that could impact his own industry, but believed the risks of going completely without oversight were too high: "Normally the way regulations are set up is when a bunch of bad things happen, there's a public outcry, and after many years a regulatory agency is set up to regulate that industry. It takes forever. That, in the past, has been bad but not something which represented a fundamental risk to the existence of civilization."[10] In response, some politicians expressed skepticism about the wisdom of regulating a technology that is still in development.[11] Responding both to Musk and to February 2017 proposals by European Union lawmakers to regulate AI and robotics, Intel CEO Brian Krzanich argued that AI is in its infancy and that it is too early to regulate the technology.[12] Many tech companies oppose harsh regulation of AI: "While some of the companies have said they welcome rules around A.I., they have also argued against tough regulations akin to those being created in Europe."[13] Instead of trying to regulate the technology itself, some scholars have suggested developing common norms, including requirements for the testing and transparency of algorithms, possibly in combination with some form of warranty.[14]

In a 2022 Ipsos survey, attitudes towards AI varied greatly by country; 78% of Chinese citizens, but only 35% of Americans, agreed that "products and services using AI have more benefits than drawbacks".[8] A 2023 Reuters/Ipsos poll found that 61% of Americans agree, and 22% disagree, that AI poses risks to humanity.[15] In a 2023 Fox News poll, 35% of Americans thought it "very important", and an additional 41% thought it "somewhat important", for the federal government to regulate AI, versus 13% responding "not very important" and 8% responding "not at all important".[16][17]