Three Laws of Robotics

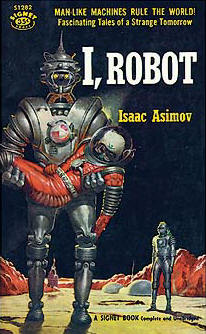

The Three Laws of Robotics (often shortened to The Three Laws or Asimov's Laws) are a set of rules devised by science fiction author Isaac Asimov, which were to be followed by robots in several of his stories. The rules were introduced in his 1942 short story "Runaround" (included in the 1950 collection I, Robot), although similar restrictions had been implied in earlier stories.

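The Three Laws, presented as being from the fictional "Handbook of Robotics, 56th Edition, 2058 A.D.", are:[1]

1. First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
3. Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.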

Use in fiction

The Three Laws form an organizing principle and unifying theme for Asimov's robot-based fiction, appearing in his Robot series, the stories linked to it, and his (initially pseudonymous) Lucky Starr series of young-adult fiction. The Laws are incorporated into almost all of the positronic robots appearing in his fiction and, being intended as a safety feature, cannot be bypassed. Many of Asimov's robot-focused stories involve robots behaving in unusual and counter-intuitive ways as an unintended consequence of how the robot applies the Three Laws to the situation in which it finds itself. Other authors working in Asimov's fictional universe have adopted them, and references to them, often parodic, appear throughout science fiction as well as in other genres.

The original laws have been altered and elaborated on by Asimov and other authors. Asimov himself made slight modifications to the first three in subsequent works to further develop how robots would interact with humans and with each other. In later fiction, where robots had taken responsibility for the government of whole planets and human civilizations, Asimov also added a fourth, or zeroth, law to precede the others.

The Three Laws, and the Zeroth, have pervaded science fiction and are referred to in many books, films, and other media. They have also influenced thought on the ethics of artificial intelligence.

Alterations

By Asimov

Asimov's stories test his Three Laws in a wide variety of circumstances, leading to the proposal and rejection of modifications. Science fiction scholar James Gunn wrote in 1982, "The Asimov robot stories as a whole may respond best to an analysis on this basis: the ambiguity in the Three Laws and the ways in which Asimov played twenty-nine variations upon a theme".[18] While the original set of Laws provided inspiration for many stories, Asimov introduced modified versions from time to time.

Ambiguities and loopholes

Unknowing breach of the laws

In The Naked Sun, Elijah Baley points out that the Laws had been deliberately misrepresented, because robots could unknowingly break any of them. He restates the First Law as "A robot may do nothing that, to its knowledge, will harm a human being; nor, through inaction, knowingly allow a human being to come to harm." This change in wording makes it clear that robots can become the tools of murder, provided they are not aware of the nature of their tasks: for instance, a robot could be ordered to add something to a person's food without knowing that it is poison. Furthermore, he points out that a clever criminal could divide a task among multiple robots so that no individual robot could recognize that its actions would lead to harming a human being.[37] The Naked Sun complicates the issue by portraying a decentralized, planetwide communication network among Solaria's millions of robots, meaning that the criminal mastermind could be located anywhere on the planet.
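Baley's task-splitting argument can be illustrated with a toy model. This is a hypothetical sketch, not anything described in Asimov's text: it assumes each robot checks only its own assigned step against the knowledge-qualified First Law, and the names `Step`, `first_law_permits`, and `plan_executes` are invented for illustration. Under that assumption, a harmful plan split between two uninformed robots passes every individual check, while the same plan given to one informed robot is refused.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Step:
    """One action assigned to a robot (hypothetical model)."""
    robot: str
    action: str
    known_harmful: bool  # what the assigned robot knows about this step alone


def first_law_permits(step: Step) -> bool:
    """Baley's restated First Law: refuse only actions known to harm a human."""
    return not step.known_harmful


def plan_executes(plan: List[Step]) -> bool:
    """Each robot checks only its own step; the plan runs if every check passes."""
    return all(first_law_permits(s) for s in plan)


# Hypothetical poisoning plan split between two robots,
# neither of which is told what the other is doing.
split_plan = [
    Step("robot_a", "fetch a vial labeled 'flavoring'", known_harmful=False),
    Step("robot_b", "add the vial's contents to the meal", known_harmful=False),
]

# The same outcome assigned to one robot that knows the vial holds poison.
single_plan = [
    Step("robot_c", "add poison to the meal", known_harmful=True),
]

print(plan_executes(split_plan))   # True: no single robot sees the harm
print(plan_executes(single_plan))  # False: the informed robot refuses
```

The model deliberately has no step that combines what the robots know, which is exactly the gap Baley identifies: the Law constrains each robot's knowledge, not the plan as a whole.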

Baley furthermore proposes that the Solarians may one day use robots for military purposes. If a spacecraft were built with a positronic brain and carried neither humans nor the life-support systems to sustain them, the ship's robotic intelligence could naturally assume that all other spacecraft were robotic beings. Such a ship could operate more responsively and flexibly than one crewed by humans, could be armed more heavily, and its robotic brain could be equipped to slaughter humans of whose existence it is totally ignorant.[38] This possibility is referenced in Foundation and Earth, where it is discovered that the Solarians possess a strong police force of unspecified size that has been programmed to identify only the Solarian race as human. (The novel takes place thousands of years after The Naked Sun, and the Solarians have long since modified themselves from normal humans to hermaphroditic telepaths with extended brains and specialized organs.) Similarly, in Lucky Starr and the Rings of Saturn, Bigman attempts to speak with a Sirian robot about possible damage to the Solar System population from its actions, but it appears unaware of the data and programmed to ignore attempts to teach it about the matter.

Ambiguities resulting from lack of definition

The Laws of Robotics presume that the terms "human being" and "robot" are understood and well defined. In some stories this presumption is overturned.

Criticisms

Philosopher James H. Moor says that the Laws, if applied thoroughly, would produce unexpected results. He gives the example of a robot roaming the world trying to prevent harm from befalling human beings.[67]