Content moderation

On websites that invite users to post comments, content moderation is the process of detecting contributions that are irrelevant, obscene, illegal, harmful, or insulting, as opposed to useful or informative ones. It is also frequently used for censorship or the suppression of opposing viewpoints. The purpose of content moderation is to remove problematic content or apply a warning label to it, or to allow users to block and filter content themselves.[1]

Various types of Internet sites permit user-generated content such as comments, including Internet forums, blogs, and news sites powered by software such as phpBB, a wiki engine, or PHP-Nuke. Depending on the site's content and intended audience, the site's administrators decide what kinds of user comments are appropriate, then delegate the responsibility of sifting through comments to subordinate moderators. Most often, they will attempt to eliminate trolling, spamming, or flaming, although this varies widely from site to site.

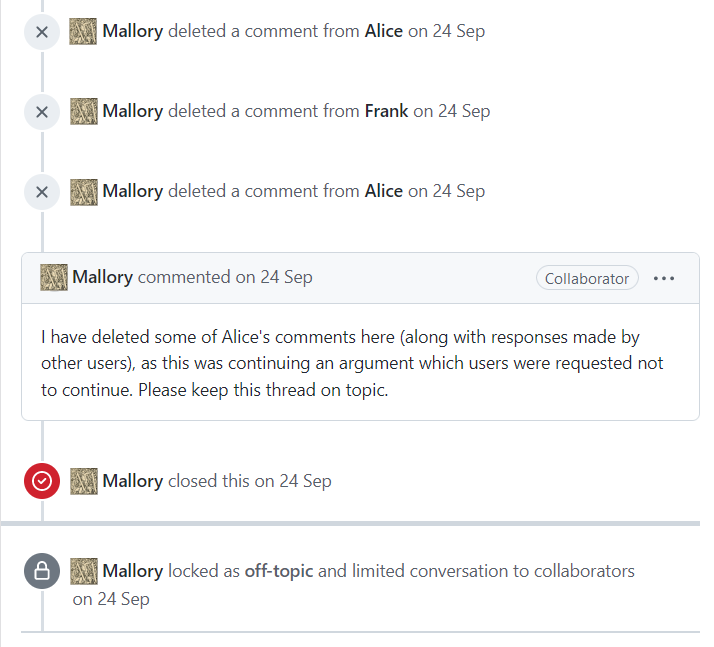

Major platforms use a combination of algorithmic tools, user reporting, and human review.[1] Social media sites may also employ content moderators to manually inspect or remove content flagged as hate speech or as otherwise objectionable. Other content issues include revenge porn, graphic content, child abuse material, and propaganda.[1] Some websites must also make their content hospitable to advertisements.[1]

In the United States, content moderation is governed by Section 230 of the Communications Decency Act, and several cases concerning the issue have reached the United States Supreme Court, such as Moody v. NetChoice, LLC.

Distributed moderation

User moderation

User moderation allows any user to moderate any other user's contributions. Billions of people are currently making decisions on what to share, forward or give visibility to on a daily basis.[20] On a large site with a sufficiently large active population, this usually works well, since relatively small numbers of troublemakers are screened out by the votes of the rest of the community.
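As a rough illustration of how community votes can screen out a small number of troublemakers, the following minimal sketch models comments with up and down votes and hides those whose net score falls below a threshold. The `Comment` class, the `visible_comments` function, and the `hide_below` threshold are hypothetical names chosen for this example, not the mechanism of any particular site.

```python
from dataclasses import dataclass, field


@dataclass
class Comment:
    """A user contribution with community votes (hypothetical model)."""
    author: str
    text: str
    votes: dict = field(default_factory=dict)  # voter name -> +1 or -1

    def vote(self, voter: str, value: int) -> None:
        # Each user gets one vote per comment; voting again replaces it.
        if value not in (1, -1):
            raise ValueError("vote must be +1 or -1")
        if voter == self.author:
            raise ValueError("authors cannot vote on their own comments")
        self.votes[voter] = value

    @property
    def score(self) -> int:
        # Net score: upvotes minus downvotes.
        return sum(self.votes.values())


def visible_comments(comments, hide_below=-2):
    """Return the comments whose net score is not below the threshold.

    The default threshold of -2 is an arbitrary assumption.
    """
    return [c for c in comments if c.score >= hide_below]
```

In this sketch a comment stays visible until enough distinct users vote it down, so a single hostile voter cannot hide a contribution alone. Real sites typically add further safeguards, such as weighting votes by reputation.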

User moderation can also take the form of reactive moderation, which depends on users of a platform or site to report content that is inappropriate and breaches community standards. When users encounter an image or video they deem unfit, they can click the report button. The complaint is then filed and queued for moderators to review.[21]
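The report-and-queue flow described above can be sketched as a simple first-in, first-out queue. This is only an illustrative sketch: the `Report` and `ReportQueue` names, the fields, and the rule that a user may report a given item only once are assumptions made for the example.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Report:
    """A complaint filed by a user about one piece of content (hypothetical)."""
    content_id: str
    reporter: str
    reason: str


class ReportQueue:
    """Holds filed reports until a moderator reviews them, oldest first."""

    def __init__(self):
        self._pending = deque()
        self._seen = set()  # (content_id, reporter) pairs already filed

    def file(self, report: Report) -> bool:
        # Ignore repeat reports of the same content by the same user.
        key = (report.content_id, report.reporter)
        if key in self._seen:
            return False
        self._seen.add(key)
        self._pending.append(report)
        return True

    def next_for_review(self):
        # Return the oldest pending report, or None if the queue is empty.
        return self._pending.popleft() if self._pending else None

    def __len__(self):
        return len(self._pending)
```

A moderation tool built on such a queue would call `file` when a user clicks the report button and `next_for_review` when a moderator is ready for the next complaint.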