User interface

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making process. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls and process controls. The design considerations applicable when creating user interfaces are related to, or involve, such disciplines as ergonomics and psychology.

Generally, the goal of user interface design is to produce a user interface that makes it easy, efficient, and enjoyable (user-friendly) to operate a machine in a way that produces the desired result (i.e. maximum usability). This means that the operator needs to provide minimal input to achieve the desired output, and that the machine minimizes undesired outputs to the user.

User interfaces are composed of one or more layers, including a human–machine interface (HMI) that typically interfaces machines with physical input hardware (such as keyboards, mice, or game pads) and output hardware (such as computer monitors, speakers, and printers). A device that implements an HMI is called a human interface device (HID). User interfaces that dispense with the physical movement of body parts as an intermediary step between the brain and the machine use no input or output devices other than electrodes; they are called brain–computer interfaces (BCIs) or brain–machine interfaces (BMIs).

Other terms for human–machine interfaces are man–machine interface (MMI) and, when the machine in question is a computer, human–computer interface. Additional UI layers may interact with one or more human senses, including: tactile UI (touch), visual UI (sight), auditory UI (sound), olfactory UI (smell), equilibria UI (balance), and gustatory UI (taste).

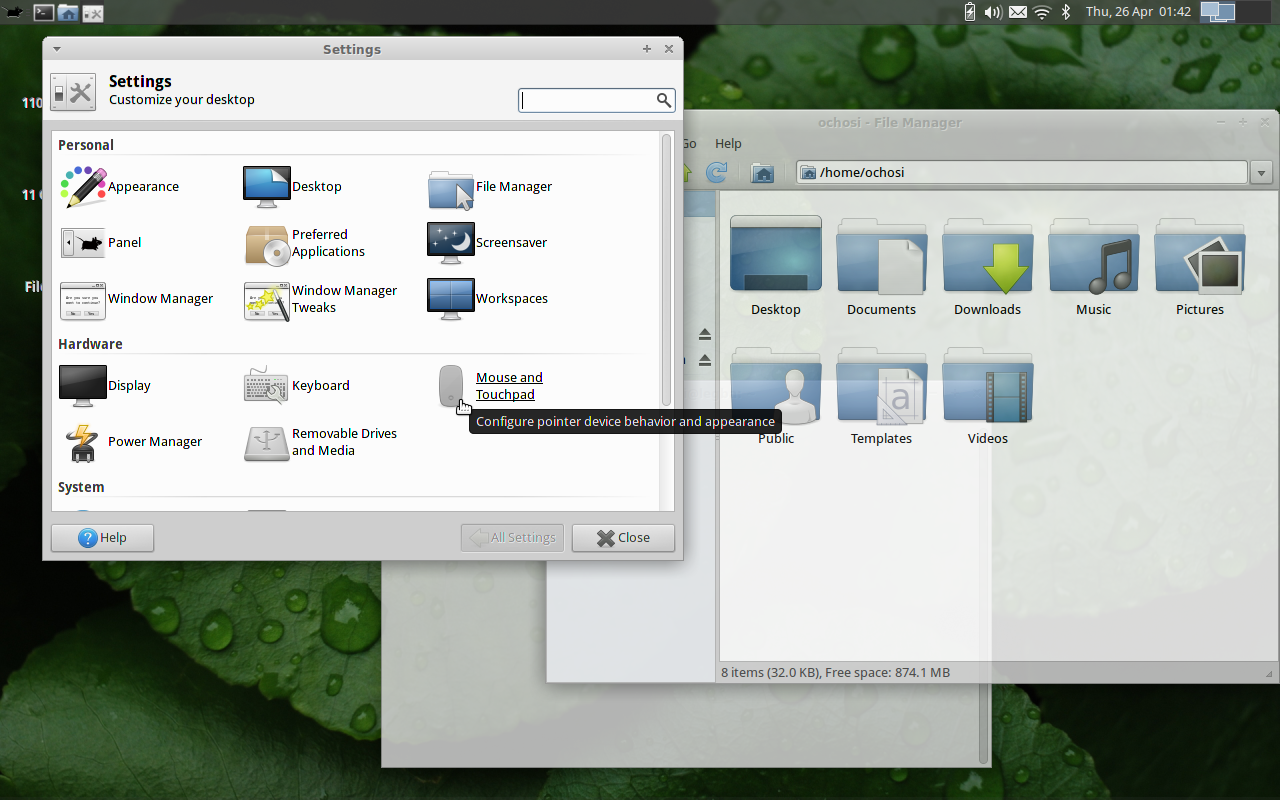

Composite user interfaces (CUIs) are UIs that interact with two or more senses. The most common CUI is a graphical user interface (GUI), which is composed of a tactile UI and a visual UI capable of displaying graphics. When sound is added to a GUI, it becomes a multimedia user interface (MUI).

There are three broad categories of CUI: standard, virtual and augmented. A standard CUI uses standard human interface devices such as keyboards, mice, and computer monitors. A CUI that blocks out the real world to create a virtual reality is virtual and uses a virtual reality interface. A CUI that does not block out the real world and instead creates augmented reality is augmented and uses an augmented reality interface. A UI that interacts with all human senses is called a qualia interface, named after the theory of qualia.

CUIs may also be classified by the number of senses they interact with, as either an X-sense virtual reality interface or an X-sense augmented reality interface, where X is the number of senses interfaced with. For example, a Smell-O-Vision is a 3-sense (3S) standard CUI with visual display, sound and smells; a virtual reality interface that interfaces with smells and touch is said to be a 4-sense (4S) virtual reality interface; and an augmented reality interface that interfaces with smells and touch is said to be a 4-sense (4S) augmented reality interface.
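The naming scheme described above can be sketched as a small classifier. This is only an illustration: the names `Sense`, `Reality`, `Interface` and `describe` are hypothetical, and the code assumes that "all human senses" means the six senses listed earlier (touch, sight, sound, smell, balance, taste) and that the 4-sense examples combine smell and touch with sight and sound.

```python
from dataclasses import dataclass
from enum import Enum


class Sense(Enum):
    # The six senses named in the text (assumed here to be "all human senses").
    TOUCH = "tactile"
    SIGHT = "visual"
    SOUND = "auditory"
    SMELL = "olfactory"
    BALANCE = "equilibria"
    TASTE = "gustatory"


class Reality(Enum):
    # The three broad CUI categories.
    STANDARD = "standard"
    VIRTUAL = "virtual reality"
    AUGMENTED = "augmented reality"


@dataclass(frozen=True)
class Interface:
    senses: frozenset
    reality: Reality = Reality.STANDARD


def describe(ui: Interface) -> str:
    """Return a label for the interface following the scheme in the text."""
    n = len(ui.senses)
    if n == 0:
        raise ValueError("an interface must interact with at least one sense")
    if n == 1:
        # A single-sense UI is not composite, e.g. "visual UI".
        return f"{next(iter(ui.senses)).value} UI"
    if ui.senses == frozenset(Sense):
        return "qualia interface"
    if ui.reality is Reality.STANDARD:
        if ui.senses == frozenset({Sense.TOUCH, Sense.SIGHT}):
            return "graphical user interface (GUI)"
        if ui.senses == frozenset({Sense.TOUCH, Sense.SIGHT, Sense.SOUND}):
            return "multimedia user interface (MUI)"
        return f"{n}-sense ({n}S) standard CUI"
    return f"{n}-sense ({n}S) {ui.reality.value} interface"


# Smell-O-Vision: visual display, sound and smells on standard hardware.
smell_o_vision = Interface(frozenset({Sense.SIGHT, Sense.SOUND, Sense.SMELL}))
print(describe(smell_o_vision))  # 3-sense (3S) standard CUI
```

Under the same assumptions, `describe(Interface(frozenset({Sense.SIGHT, Sense.SOUND, Sense.SMELL, Sense.TOUCH}), Reality.VIRTUAL))` yields the "4-sense (4S) virtual reality interface" label used in the example above.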

There is a difference between a user interface and an operator interface or a human–machine interface (HMI).

In science fiction, HMI is sometimes used to refer to what is better described as a direct neural interface. However, direct neural interfaces are seeing increasing real-life application in (medical) prostheses, the artificial extensions that replace a missing body part (e.g., cochlear implants).[7][8]

In some circumstances, computers might observe the user and react according to their actions without specific commands. A means of tracking parts of the body is required, and sensors noting the position of the head, direction of gaze and so on have been used experimentally. This is particularly relevant to immersive interfaces.[9][10]